There is a big difference between an application that works OK only in a perfect situation and one that behaves as expected in all circumstances. To the user, the former may look almost ready, with just a few bugs here and there. In reality, getting from there to a reliable application takes a lot of work from the programmer. One needs to identify all the cases that need special attention: errors as well as situations in which the expected result is not obvious.

Thinking about all the things that could go wrong in code execution and preparing for them is a big part of a programmer's job. At the same time, this topic is often not covered well in learning materials for beginners. Let’s see this process in action with a simple example.

User story

User stories explain what the user wants to achieve with the application we are building. Here, it’s straightforward—divide one number by another, and then show the result on the screen.

Interface

We can assume that the users of our application will be familiar with this notation:

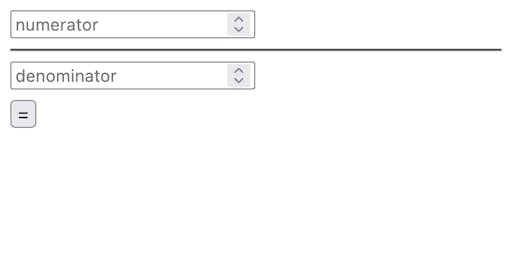

Let’s use this as a basis for the application interface: numerator and denominator become number inputs with the result shown next to them.

Implementation

I've built a simple implementation of the application. It’s available here, and the code looks like the following:

The body of index.html defines our view:

```html
<input type="number" id="numerator" placeholder="numerator" />
<hr />
<input type="number" id="denominator" placeholder="denominator" />
<br />
<button id="equals">=</button>
<div id="result"></div>
```

and the logic is in main.js:

```javascript
// Grab the elements defined in index.html.
const numerator = document.querySelector("#numerator"),
  denominator = document.querySelector("#denominator"),
  equals = document.querySelector("#equals"),
  result = document.querySelector("#result");

equals.addEventListener("click", () => {
  // Convert the raw input strings to numbers (no validation yet).
  const numeratorValue = parseFloat(numerator.value),
    denominatorValue = parseFloat(denominator.value);

  const resultValue = numeratorValue / denominatorValue;

  // Display whatever the division produced, including NaN or Infinity.
  result.innerHTML = resultValue;
});
```

Bugs

The application seems to work OK when you enter reasonable values, but it’s easy to find cases where it starts behaving incorrectly.

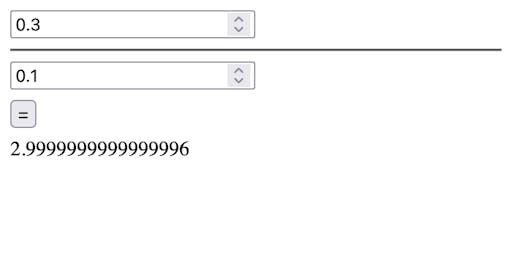

Floating-point number representation

As you may know, JavaScript stores all numbers (values of the Number type) as 64-bit floating-point numbers. Like any solution to a nontrivial problem, this comes with trade-offs: in the case of floating-point representation, the big downside is that many numbers are represented only by an approximate value. When we want to store a value such as 0.1, in reality we are storing a value that is extremely close to 0.1. (Integers such as 3 are stored exactly, as long as they are not too large.) The differences are mostly negligible, but during mathematical operations they compound and can become noticeable. For example, dividing 0.3 by 0.1 has a surprising result in JavaScript:

```
>> 0.3/0.1
2.9999999999999996
```
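Printing more digits than JavaScript normally shows makes the approximation visible. The sketch below uses the built-in Number.prototype.toFixed; the `digits` helper name and the width of 20 digits are arbitrary choices for illustration:

```javascript
// Print 20 digits after the decimal point to reveal the value actually stored.
const digits = (x) => x.toFixed(20);

console.log(digits(3));   // 3.00000000000000000000 (3 is stored exactly)
console.log(digits(0.1)); // 0.10000000000000000555 (0.1 is only approximated)
console.log(0.3 / 0.1);   // 2.9999999999999996
```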

The same result is displayed to the users of our application.

There are a few approaches that could be used to address this behavior. One obvious solution would be to decide how much precision our calculations need and round the result accordingly. For example, when we deal with money, we need to know the result down to 0.01; any difference below that is meaningless.
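A minimal sketch of fixed-precision rounding, assuming two decimal places are enough (as in the money example). roundTo is a hypothetical helper, not part of the original app:

```javascript
// Hypothetical helper: round `value` to a fixed number of decimal places.
function roundTo(value, decimals) {
  const factor = 10 ** decimals;
  return Math.round(value * factor) / factor;
}

console.log(roundTo(0.3 / 0.1, 2)); // 3
console.log(roundTo(1 / 3, 2));     // 0.33
```

Scaling by 10 ** decimals is itself a floating-point operation, so values sitting right on a rounding boundary can still go the "wrong" way: roundTo(1.005, 2) returns 1, because 1.005 * 100 evaluates to 100.49999999999999.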

Blind rounding introduces a new edge case: if the numerator is small enough relative to the denominator, at some point the result will round to 0. We could push the issue further away by rounding more smartly, trying to keep a few meaningful digits in the result. This would complicate the solution, but depending on the use cases we want to support, it may make sense.
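A sketch of the "smarter" variant, keeping a fixed number of significant digits with the built-in Number.prototype.toPrecision. roundToSignificant is a hypothetical name, and three digits is an arbitrary choice:

```javascript
// Hypothetical helper: keep `digits` significant digits rather than a fixed
// number of decimal places. toPrecision returns a string, so convert it back.
function roundToSignificant(value, digits) {
  return Number(value.toPrecision(digits));
}

const tiny = 1 / 30000; // 0.0000333...

// Fixed two-decimal rounding (as in the money example) loses the value entirely.
console.log(Math.round(tiny * 100) / 100); // 0
// Three significant digits keep it meaningful.
console.log(roundToSignificant(tiny, 3));       // 0.0000333
console.log(roundToSignificant(0.3 / 0.1, 3));  // 3
```

For large numbers, toPrecision switches to exponential notation (for example "1.23e+5"), which Number parses back without trouble. It also means 123456 becomes 123000; whether that is acceptable is again a product decision.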

Max safe integer

Floating-point numbers can represent a much wider range of values than integers of the same size; the downside is the imprecise representation we discussed above. As values move further away from 0, the imprecision grows as well. At some point, JavaScript can no longer represent integers reliably: the imprecision becomes big enough that two different integers are stored as the same value. JavaScript exposes this limit as a constant:

```
>> Number.MAX_SAFE_INTEGER
9007199254740991
```

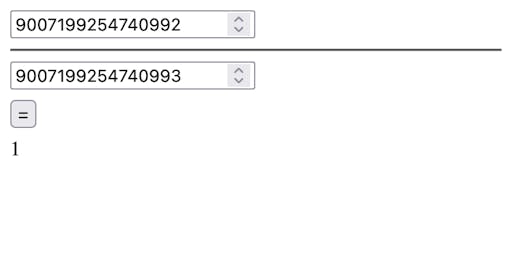

From that point on, our calculations become unreliable, as you can see here:

One value ends with 2, the other with 3, but our application presents the division result as being equal to 1.
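The effect can be reproduced outside the UI. The two numbers below are an illustrative assumption (not necessarily the ones from the screenshot), picked just above Number.MAX_SAFE_INTEGER so that they differ only in the last digit:

```javascript
// Above Number.MAX_SAFE_INTEGER (2 ** 53 - 1), neighboring integers can
// collapse into the same floating-point value.
const a = parseFloat("9007199254740993"); // ends with 3
const b = parseFloat("9007199254740992"); // ends with 2

console.log(a === b);                           // true: both are stored as 2 ** 53
console.log(a / b);                             // 1
console.log(Number.isSafeInteger(b));           // false
console.log(Number.isSafeInteger(2 ** 53 - 1)); // true
```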

We could address this problem in a few ways:

- limit the maximum value the user can enter in the app

- change the data representation to BigInt, which supports arbitrarily large integers at the cost of giving up support for fractions
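Both options can be sketched briefly. isWithinSafeRange is a hypothetical helper for the first option; the second uses the built-in BigInt type, whose division truncates toward zero:

```javascript
// Option 1 (hypothetical check): reject values whose magnitude exceeds
// Number.MAX_SAFE_INTEGER. NaN also fails this check.
function isWithinSafeRange(value) {
  return Math.abs(value) <= Number.MAX_SAFE_INTEGER;
}

console.log(isWithinSafeRange(parseFloat("9007199254740993"))); // false
console.log(isWithinSafeRange(42.5));                           // true

// Option 2: BigInt keeps large integers exact...
const big = BigInt("9007199254740993");
const bigger = BigInt("9007199254740992");
console.log(big === bigger); // false: the two values stay distinct
console.log(big - bigger);   // 1n
// ...but division drops the fractional part.
console.log(big / bigger);   // 1n
console.log(7n / 2n);        // 3n, not 3.5
```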

As you can see, once again there is no perfect solution: depending on the use cases we support, one approach or the other can be better.

Issues

Besides obviously wrong behavior, there are more subtle issues with the application in its current state.

Unspecified behavior for non-numbers

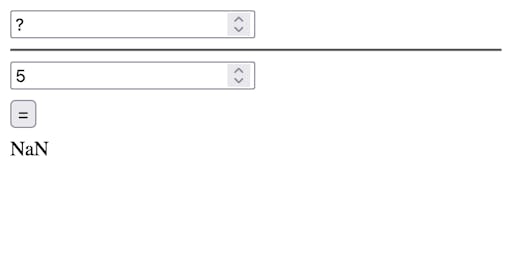

In our app, we plugged the user's input directly into the parseFloat function, without any attempt at handling errors. The following input values can break the application:

- "1 " (1 followed by a trailing space)
- "1,0" (wrong decimal separator)
- "0x1" (hexadecimal notation of the number 1)
- "1e0" (scientific notation of 1)
- "LXIV" (Roman numeral for 64)
- "one" (the number written as a word)
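To see why these inputs are tricky, it helps to compare what parseFloat and the built-in Number conversion return for the same raw strings. This runs outside the browser, so it ignores the sanitizing done by the number input; "1,5" is used instead of "1,0" because it makes the silent truncation visible:

```javascript
// How two built-in conversions treat the same raw strings.
const samples = ["1 ", "1,5", "0x1", "1e0", "LXIV", "one", ""];

for (const text of samples) {
  console.log(JSON.stringify(text), parseFloat(text), Number(text));
}
// Results (text, parseFloat, Number):
// "1 "   1    1
// "1,5"  1    NaN
// "0x1"  0    1
// "1e0"  1    1
// "LXIV" NaN  NaN
// "one"  NaN  NaN
// ""     NaN  0
```

Neither conversion is "right": parseFloat silently ignores everything after the first invalid character, while Number rejects "1,5" but turns an empty string into 0.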

For each of those values, you could evaluate the cost and benefit of supporting this kind of input. When done right, you can get a better user experience in exchange for a bit of extra programming effort. At the same time, more supported formats mean more edge cases, and if those are resolved incorrectly, they can lead to unexpected application behavior.
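As an illustration of that trade-off, here is a hypothetical parseStrict function that accepts only plain decimal notation and can optionally treat a comma as the decimal separator. The regular expression and the allowComma option are assumptions made for this sketch:

```javascript
// Hypothetical strict parser: accepts an optional sign, digits, an optional
// fraction and an optional exponent; returns null for anything else.
// `allowComma` treats the first "," as the decimal separator.
function parseStrict(text, { allowComma = false } = {}) {
  let normalized = text.trim();
  if (allowComma) {
    normalized = normalized.replace(",", ".");
  }
  const decimal = /^[+-]?(\d+(\.\d*)?|\.\d+)([eE][+-]?\d+)?$/;
  return decimal.test(normalized) ? Number(normalized) : null;
}

console.log(parseStrict("1 "));  // 1
console.log(parseStrict("0x1")); // null
console.log(parseStrict("1,5")); // null
console.log(parseStrict("1,5", { allowComma: true }));   // 1.5
// The new edge case: a thousands separator is now read as a decimal point.
console.log(parseStrict("1,000", { allowComma: true })); // 1
```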

Lack of feedback in UI

For the input, we have used <input type="number">. This type of input does a bit of data validation: our numerator.value will be equal to an empty string whenever the user enters anything that is not a valid number. It would be nice to provide some visual feedback when the input value is incorrect, for example by adding a red border to the element.
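A minimal sketch of that feedback, assuming the app calls it from an input event listener. It relies on the standard validity.badInput flag of input elements; markValidity is a hypothetical name, and treating an empty field as invalid is a design choice:

```javascript
// Hypothetical helper: mark an <input type="number"> as invalid when the
// browser could not parse what the user typed (validity.badInput) or when
// the field is empty. Returns true if the input was marked invalid.
function markValidity(input) {
  const invalid = input.validity.badInput || input.value === "";
  // Red border as visual feedback; an empty string restores the default style.
  input.style.borderColor = invalid ? "red" : "";
  return invalid;
}

// Possible wiring with the elements from main.js:
// numerator.addEventListener("input", () => markValidity(numerator));
// denominator.addEventListener("input", () => markValidity(denominator));
```

Because the function only touches value, validity and style, it can also be exercised with a plain object standing in for a real element.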

Unfriendly UX for errors

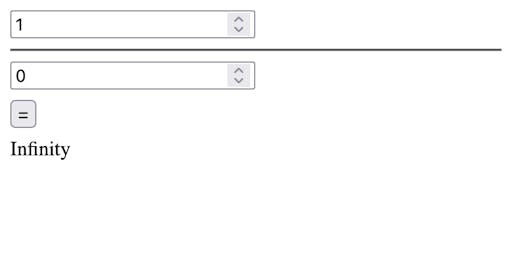

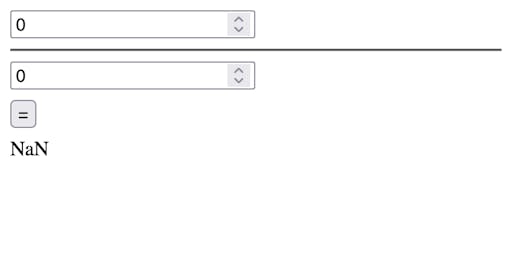

In the case of errors, our application tries to calculate the result and shows the error in one of many ways:

There are a few things to consider:

- NaN (not a number) and Infinity are what JavaScript returns for different invalid operations. These defaults are not necessarily optimal for our use case: perhaps we want to always show NaN, or show no result at all.
- It would be nice to show an error message that explains what went wrong.
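One possible policy, sketched as a hypothetical formatResult function: validate the parsed inputs, treat division by zero separately, and replace any remaining non-finite result with a message. The wording of the messages is an assumption:

```javascript
// Hypothetical formatter: decide what to show instead of exposing NaN/Infinity.
function formatResult(numeratorValue, denominatorValue) {
  if (!Number.isFinite(numeratorValue) || !Number.isFinite(denominatorValue)) {
    return "Please enter two numbers.";
  }
  if (denominatorValue === 0) {
    return "Cannot divide by zero.";
  }
  const value = numeratorValue / denominatorValue;
  // Finite inputs can still overflow, e.g. 1e308 / 1e-308.
  if (!Number.isFinite(value)) {
    return "The result is too large to display.";
  }
  return String(value);
}

console.log(1 / 0);                  // Infinity
console.log(0 / 0);                  // NaN
console.log(formatResult(6, 3));     // 2
console.log(formatResult(1, 0));     // Cannot divide by zero.
console.log(formatResult(NaN, 2));   // Please enter two numbers.
```

In main.js, the click handler could then set result.textContent = formatResult(numeratorValue, denominatorValue) instead of writing resultValue directly.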

Again, the best approach depends on the context in which the application will be used.

Enter key

While testing the application, I tried a few times to run a calculation by pressing Enter. Supporting this would make for a better user experience (UX).
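A small sketch, assuming the calculation is triggered by simulating a click on the existing equals button; onEnter is a hypothetical helper:

```javascript
// Hypothetical helper: build a keydown handler that runs `calculate` on Enter.
function onEnter(calculate) {
  return (event) => {
    if (event.key === "Enter") {
      calculate();
    }
  };
}

// Possible wiring with the elements from main.js:
// numerator.addEventListener("keydown", onEnter(() => equals.click()));
// denominator.addEventListener("keydown", onEnter(() => equals.click()));
```

An alternative is to wrap the inputs and the button in a <form>: browsers submit a form when Enter is pressed in one of its fields, so the app could listen for the form's submit event (calling event.preventDefault() to avoid a page reload) instead of handling keys itself.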

Want to learn more?

In this article we have seen how many issues can occur even in the simplest application. Once we know how the application should behave in all edge cases we found, the next step will be to implement that behavior and make sure it stays this way. In the follow-ups, we will see different automated means of quality control. If you are interested in receiving updates about my new articles, sign up here.